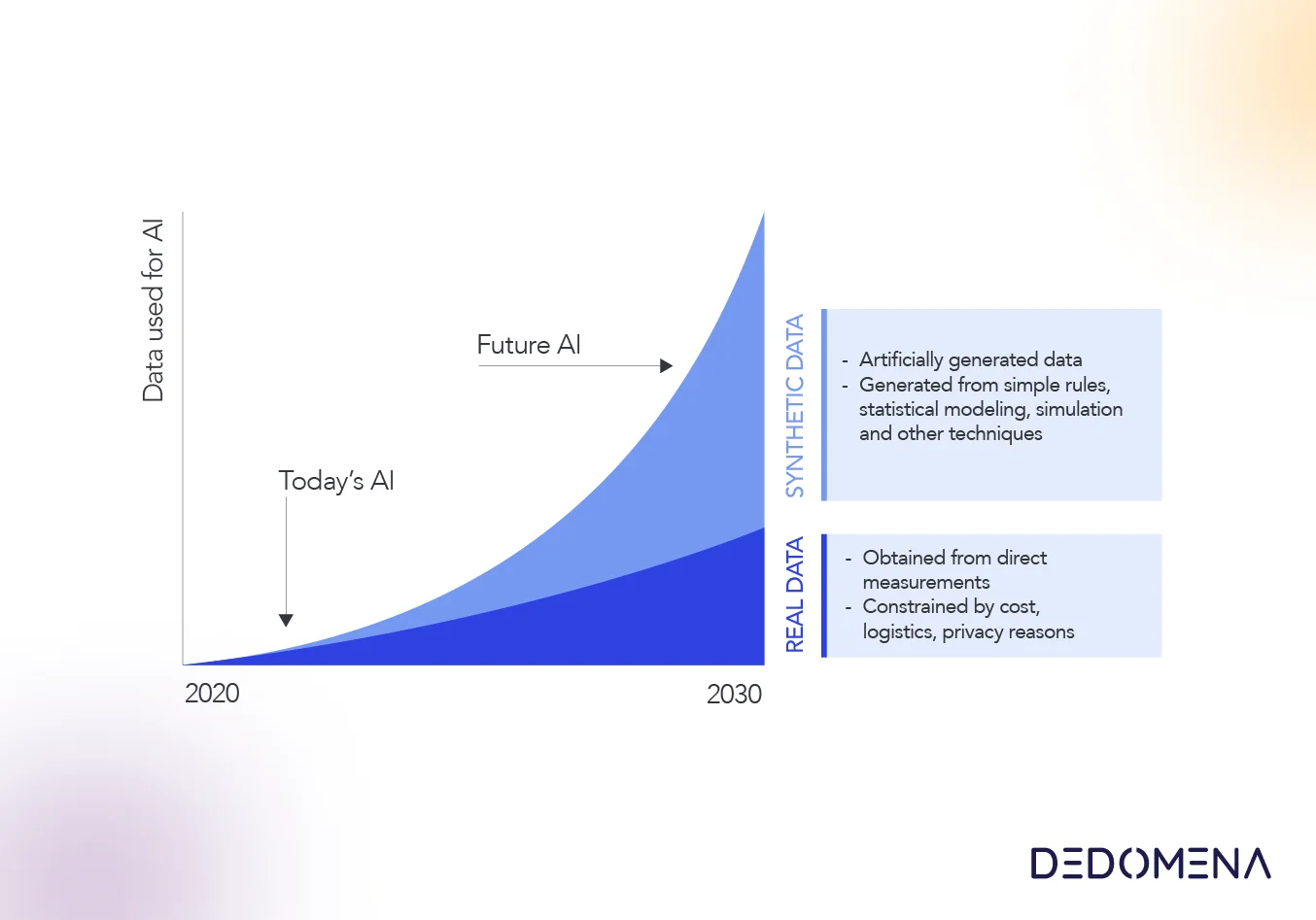

In an era of rapid technological progress, where decisions increasingly depend on data, demand for high-quality, diverse datasets has surged. However, real-world data is often hard to access because of privacy concerns, limited availability, or complex collection processes, and this has driven the rise of synthetic data. Synthetic data makes it possible to create reliable, realistic, and privacy-preserving datasets, and it is transforming industries such as finance, healthcare, and insurance. With AI-powered synthetic data generators like Dedomena's NUCLEUS, you can create comprehensive, balanced, and realistic versions of your original data.

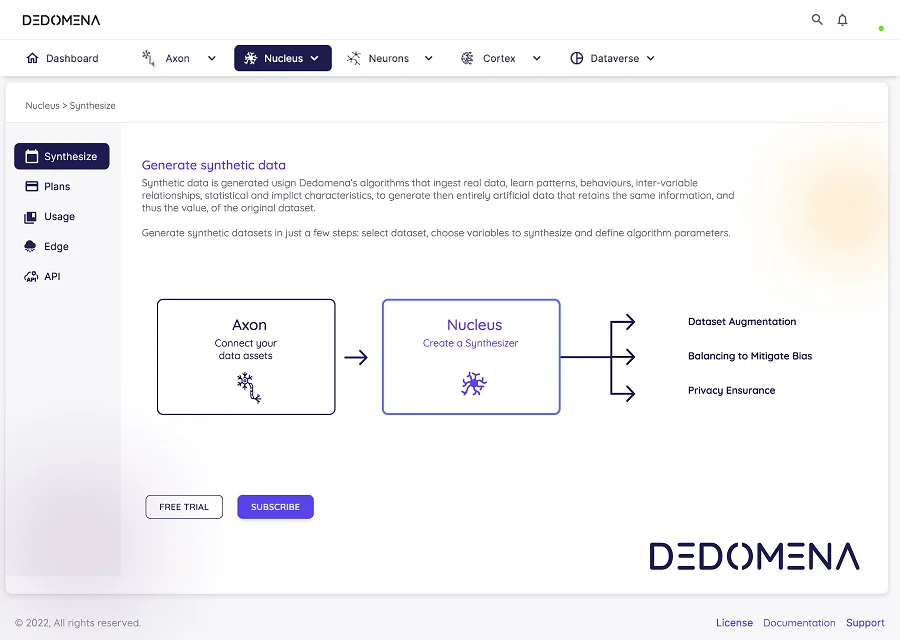

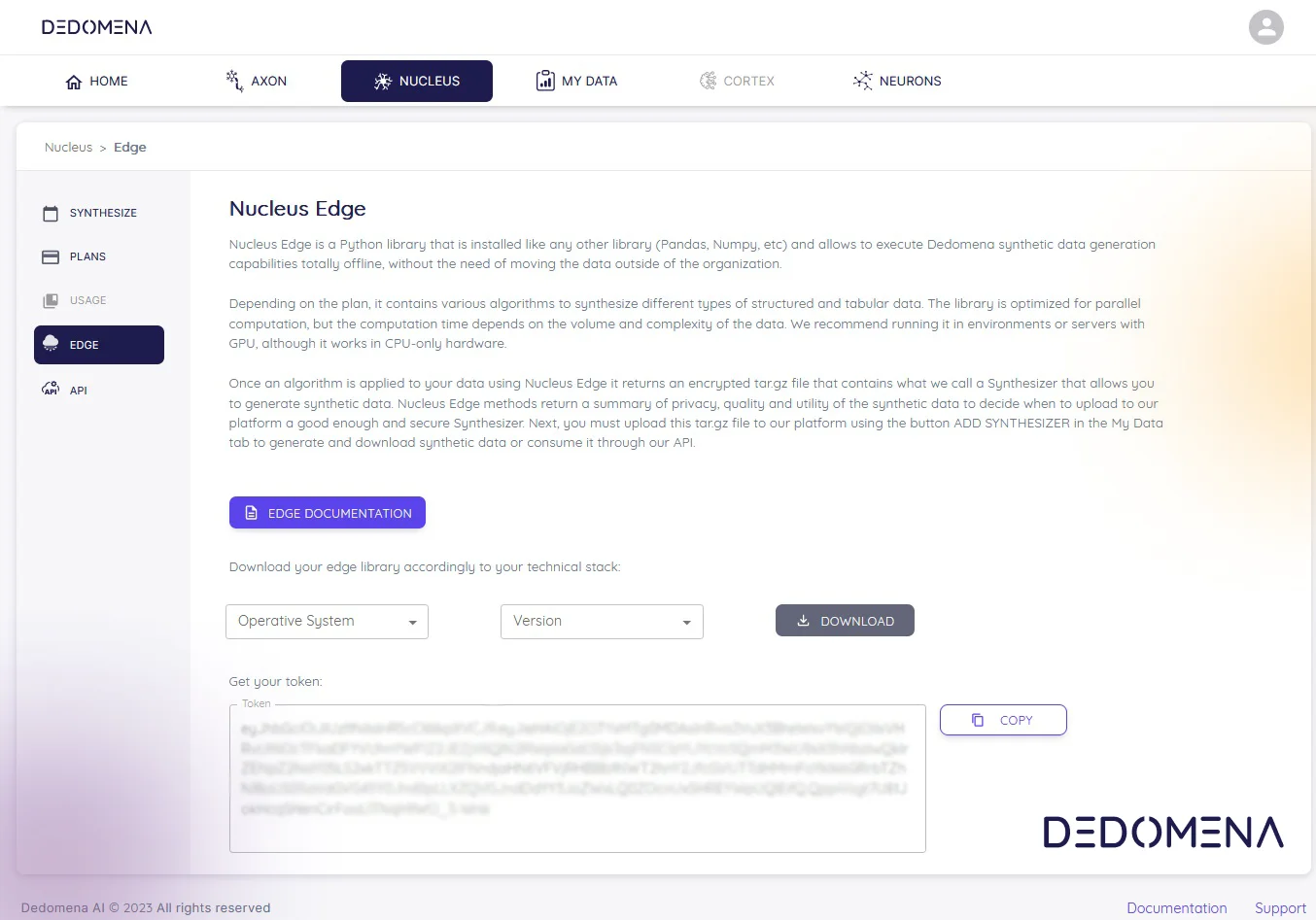

NUCLEUS: The Core Component

At the heart of Dedomena's platform is NUCLEUS, the component that trains synthesizers capable of generating an unlimited number of synthetic copies of your data. You can use it within Dedomena's platform or through the NUCLEUS EDGE module.

To get the most out of NUCLEUS, it helps to understand the fundamentals behind its capabilities. The nine facts below go beyond a list of features: each one describes a building block for using synthetic data successfully in AI and software development:

1. Edge Computation: Where Data Synthesis Meets Flexibility

NUCLEUS offers edge computation through the Nucleus Edge library, which lets you run data synthesis from any location rather than only on Dedomena's platform. This is useful when you need real-time processing, want to keep data local for privacy reasons, or need to reduce latency.

2. Privacy-by-Design: Safeguarding Sensitive Information

NUCLEUS is built on a privacy-by-design approach: privacy considerations are embedded in every stage of the data synthesis process rather than added afterwards. By excluding sensitive details while preserving statistical accuracy, NUCLEUS produces synthetic data that respects privacy norms and regulatory requirements.
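One common, tool-independent way to sanity-check the privacy of a synthetic dataset is to confirm that no synthetic record is a verbatim copy of a real one, and to measure how close each synthetic row sits to its nearest real row (the "distance to closest record", or DCR). The sketch below is a generic illustration in plain Python, not NUCLEUS's own method; the function name `distance_to_closest_record` and the toy records are hypothetical.

```python
import math

def distance_to_closest_record(real_rows, synthetic_rows):
    """For each synthetic row, return the Euclidean distance to its nearest real row.

    Rows are equal-length tuples of numeric features. A distance of 0.0 means
    the synthetic row is an exact copy of a real record, which is a privacy red flag.
    """
    distances = []
    for s in synthetic_rows:
        # Brute-force nearest-neighbour search; fine for small illustrative data.
        nearest = min(math.dist(s, r) for r in real_rows)
        distances.append(nearest)
    return distances

# Hypothetical toy data: (age, annual_income_in_thousands)
real = [(34, 52.0), (45, 61.5), (29, 40.0)]
synthetic = [(35, 50.0), (45, 61.5), (30, 41.0)]

dcr = distance_to_closest_record(real, synthetic)
exact_copies = sum(1 for d in dcr if d == 0.0)
print("DCR per synthetic row:", [round(d, 3) for d in dcr])
print("Exact copies of real records:", exact_copies)
```

Here the second synthetic row duplicates a real record, so it would be flagged. In practice you would normalize features to comparable scales first and use an indexed nearest-neighbour search for large tables.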

3. Multi-Table Synthesis: Fidelity in Complex Relationships

NUCLEUS goes beyond single-table data synthesis. It can generate sets of interrelated tables, capturing the relationships present in real-world data, such as the link between customers and their transactions. Preserving these relationships keeps the data consistent and realistic, which is crucial for training AI models on complex, interconnected datasets.
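A basic property that multi-table synthetic data must keep is referential integrity: every foreign key in a child table should point to a row that exists in the parent table. The minimal sketch below checks this in plain Python; it is a generic validation you might run on any synthetic output, not a NUCLEUS API, and the table contents, column names, and the `orphaned_foreign_keys` helper are hypothetical.

```python
def orphaned_foreign_keys(parent_rows, child_rows, pk, fk):
    """Return child rows whose foreign key has no matching parent primary key."""
    parent_keys = {row[pk] for row in parent_rows}
    return [row for row in child_rows if row[fk] not in parent_keys]

# Hypothetical synthetic output: a customers table and a transactions table.
customers = [
    {"customer_id": 1, "segment": "retail"},
    {"customer_id": 2, "segment": "business"},
]
transactions = [
    {"tx_id": 100, "customer_id": 1, "amount": 25.0},
    {"tx_id": 101, "customer_id": 2, "amount": 310.0},
    {"tx_id": 102, "customer_id": 3, "amount": 12.5},  # no customer 3: broken link
]

orphans = orphaned_foreign_keys(customers, transactions, pk="customer_id", fk="customer_id")
print("Orphaned transactions:", [row["tx_id"] for row in orphans])
```

A generator that models tables independently can easily produce orphans like transaction 102; a multi-table synthesizer is expected to avoid them.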

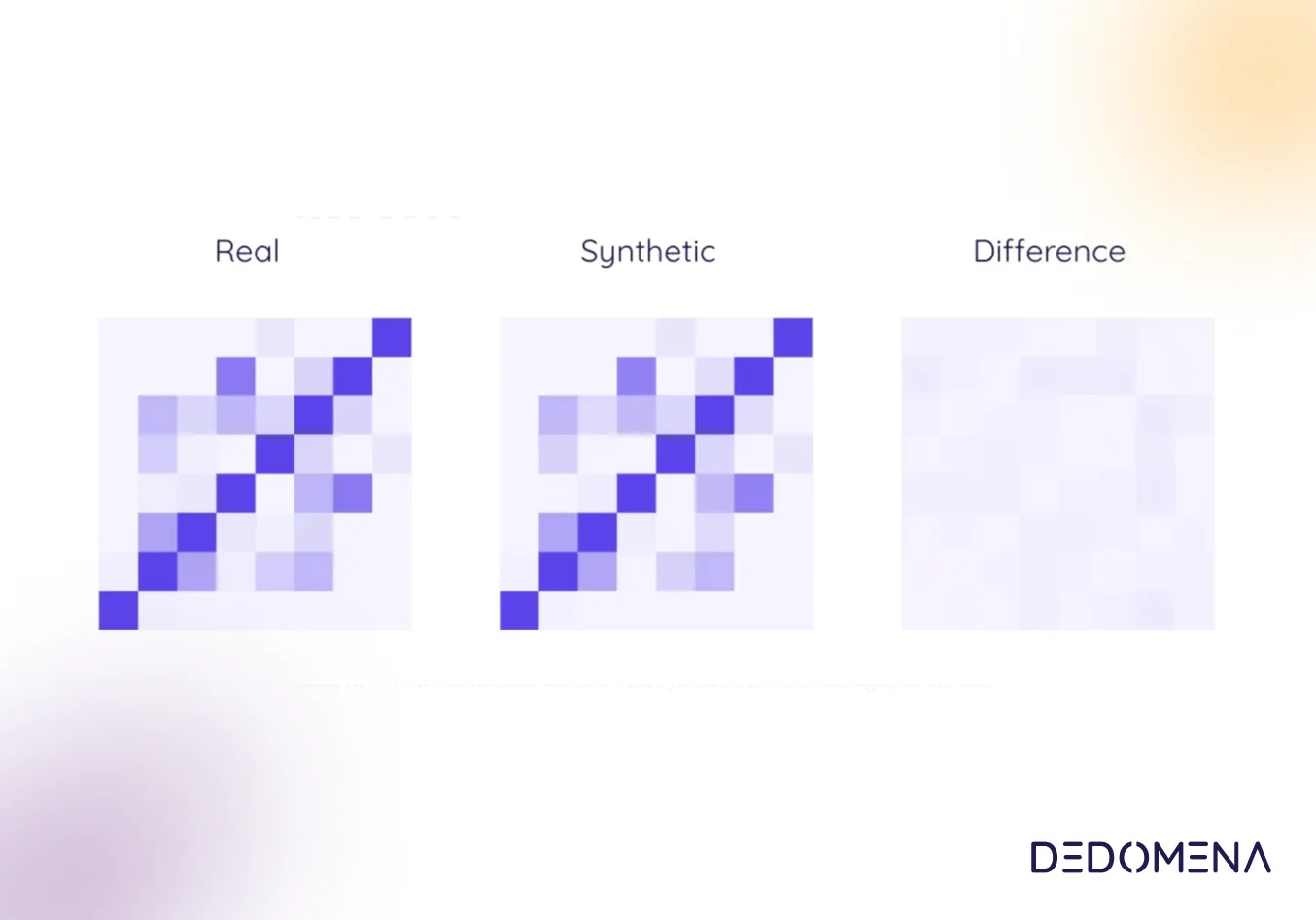

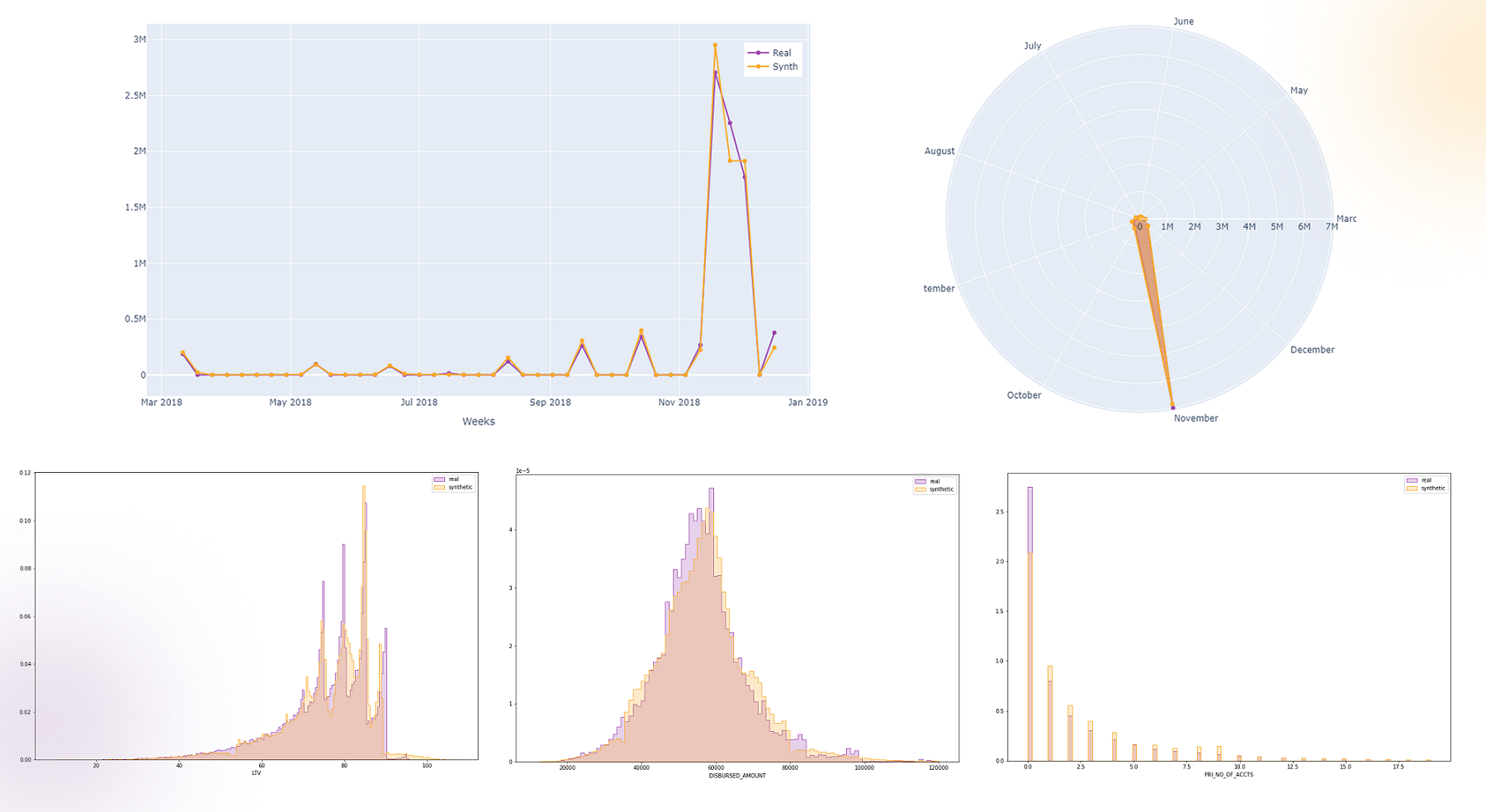

4. High-Quality Data: The Pillar of Effective Training

NUCLEUS prioritizes data quality. By closely replicating the statistical properties and distributions of the original data, it helps machine learning models trained on synthetic data learn the same relevant patterns they would learn from the real data. This improves model accuracy and yields dependable results in AI and software development.
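One standard way to check whether a synthetic column replicates the distribution of its real counterpart is the two-sample Kolmogorov-Smirnov (KS) statistic: the largest vertical gap between the two empirical cumulative distribution functions. The sketch below computes it with the Python standard library; it is offered as a generic fidelity check, not as the metric NUCLEUS uses internally, and the age values are hypothetical.

```python
from bisect import bisect_right

def ks_statistic(real, synthetic):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between the
    empirical CDFs of the two samples (0.0 = identical, 1.0 = fully disjoint)."""
    real_sorted = sorted(real)
    synth_sorted = sorted(synthetic)
    n, m = len(real_sorted), len(synth_sorted)
    max_gap = 0.0
    # Both empirical CDFs are step functions that only change at observed
    # values, so evaluating the gap at every observed value finds the maximum.
    for x in real_sorted + synth_sorted:
        cdf_real = bisect_right(real_sorted, x) / n
        cdf_synth = bisect_right(synth_sorted, x) / m
        max_gap = max(max_gap, abs(cdf_real - cdf_synth))
    return max_gap

# Hypothetical "age" column from a real dataset and its synthetic counterpart.
real_ages = [23, 29, 31, 35, 38, 41, 47, 52]
synthetic_ages = [24, 28, 33, 35, 39, 42, 46, 50]
print("KS statistic:", round(ks_statistic(real_ages, synthetic_ages), 3))
```

Values close to 0 indicate closely matching distributions. If SciPy is available, `scipy.stats.ks_2samp` computes the same statistic together with a p-value.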

5. GPU Performance Optimization: Speeding Ahead

Large datasets must be handled efficiently. NUCLEUS uses GPU performance optimization to speed up data synthesis. Faster synthesis shortens experimentation and iteration cycles, which is a competitive advantage in the fast-moving field of AI and software development.

6. Specialized Algorithms for Tailored Solutions

NUCLEUS includes a suite of specialized algorithms tailored to different domains, such as transactional data, time series, and natural language. Using an algorithm suited to your data helps the synthetic output match your application's specific requirements, supporting accurate insights and predictions.

7. Data Diversity: Robustness through Variety

Diverse data helps counter bias and overfitting. NUCLEUS can simulate a wide range of scenarios, helping you build robust models that cope with real-world complexity. This diversity is essential for AI systems that must perform well outside controlled environments.

8. Quality and Utility Assurance: Elevating Standards

Maintaining data quality and utility is essential. NUCLEUS enforces both through a comprehensive quality assurance (QA) process that verifies the synthetic data is fit for its intended purpose and preserves data integrity.

9. Seamless Integration and Format Diversity: Unifying Platforms and Data Sources

NUCLEUS connects to disparate data sources, platforms, and cloud environments, and it supports a wide range of file formats. This streamlines the synthesis process and lets you work with your data regardless of where it originates.